Content Hub ONE is a SaaS product, and its command line tool lets you export data from the server and import it back, which makes development more efficient. This article introduces that command line tool.

CLI Installation

Content Hub ONE CLI is available for Windows, macOS, and Linux, and is also available for Docker. .NET 6 must be installed, because the tool is built on .NET.

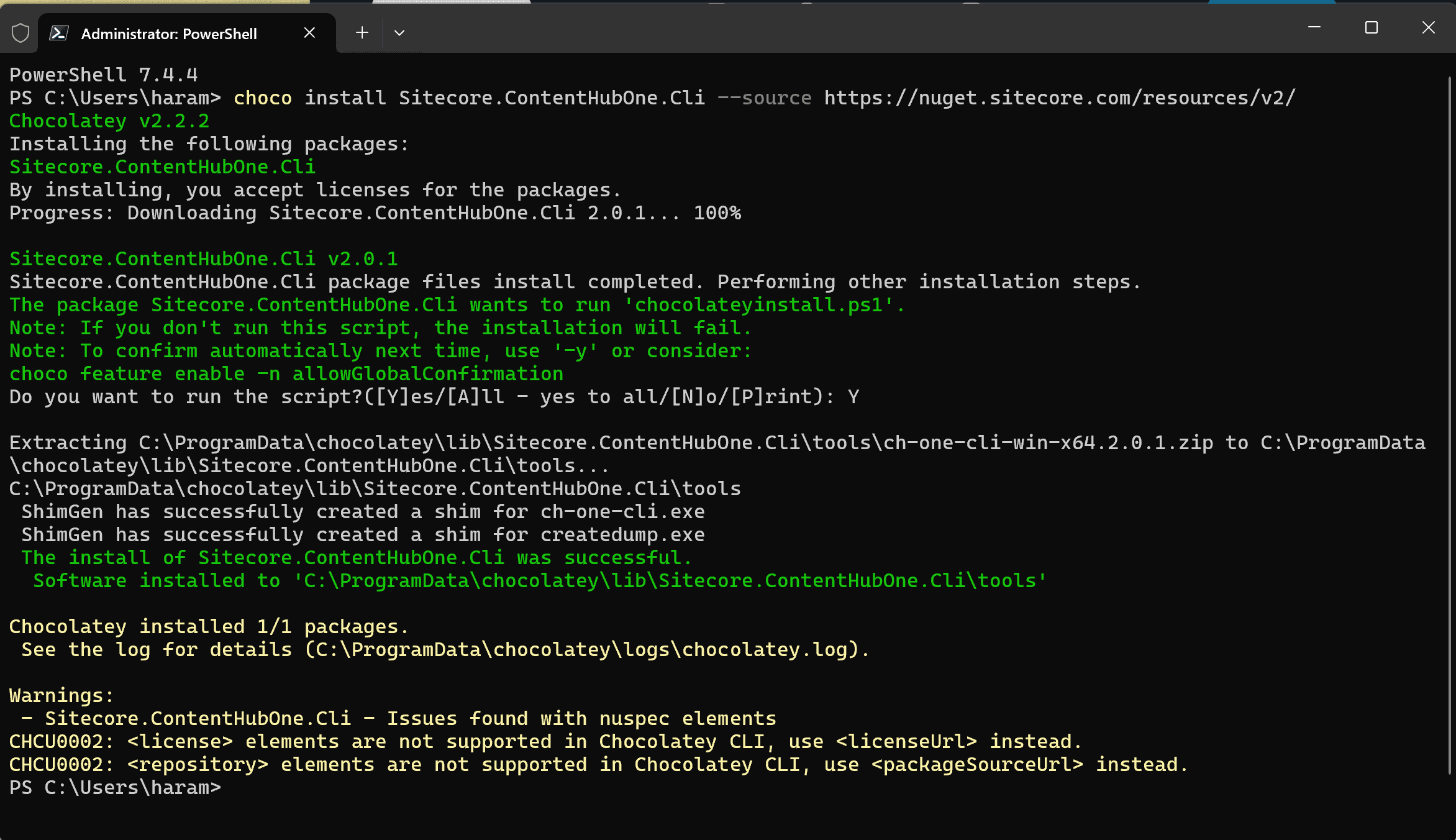

On Windows, the installation can be done using Chocolatey with the following command.

choco install Sitecore.ContentHubOne.Cli --source https://nuget.sitecore.com/resources/v2/

The following is a screenshot of the installation in progress.

To use the tool on macOS, install it with Homebrew as follows.

brew tap sitecore/content-hub

brew install ch-one-cli

After the installation was completed, I checked the version and found that 2.0.1 was installed at the time of writing this blog.
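Before moving on, it can help to confirm that the tools are actually on the PATH. The following is a minimal sketch in POSIX sh, not something the CLI provides: `require_tool` is a hypothetical helper name, and it assumes the .NET host is exposed as the `dotnet` command.

```sh
#!/bin/sh
# Report whether a command is available on PATH.
# `require_tool` is a hypothetical helper, not part of the CLI.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Check the .NET host and the CLI itself; keep going even if one is missing.
for tool in dotnet ch-one-cli; do
  require_tool "$tool" || true
done
```

If either line reports `missing`, revisit the installation step for that tool before trying to log in.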

Login to Content Hub ONE

The procedure for logging in from the command line is described in the following page.

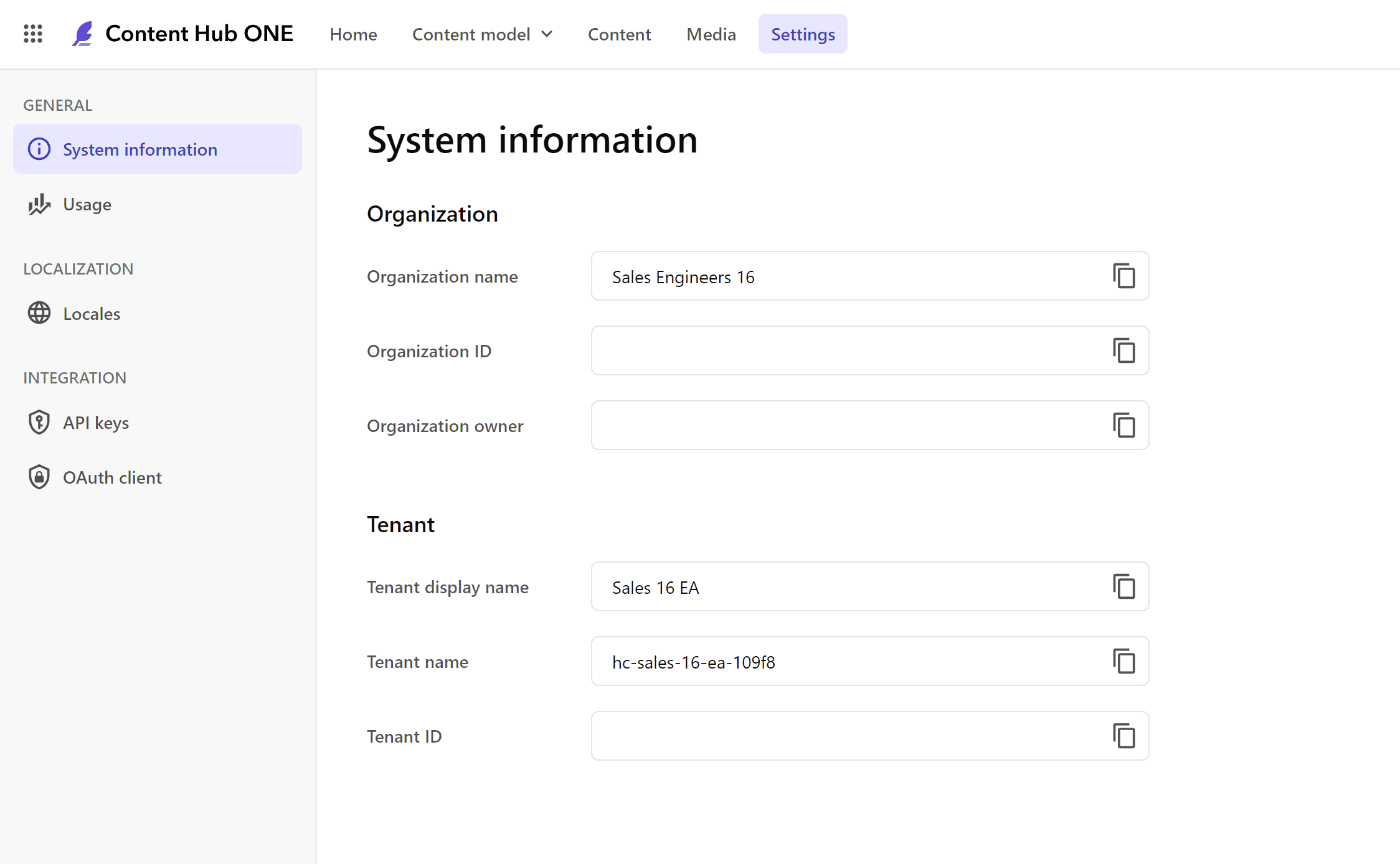

First, check the values required to log in with the tool. Click Settings in the Administration menu to bring up System Information. Most of this screen consists of keys and other information, so that part has been cut from the screenshot.

From this screen, organization-id and tenant-id can be obtained.

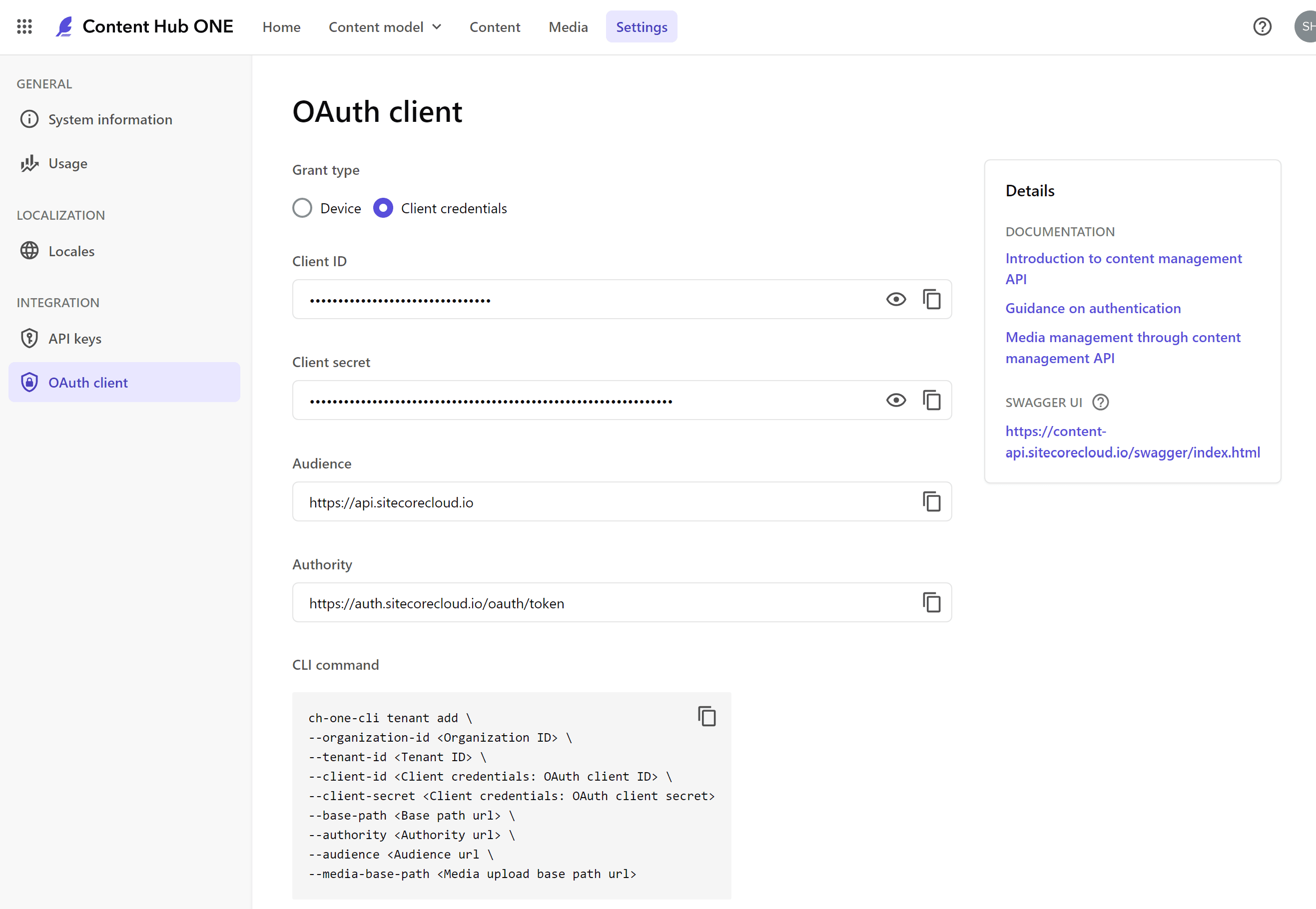

Next, click on Integration > OAuth client on the menu screen to open the page to browse client credentials.

From this page, you can check client-id and client-secret.

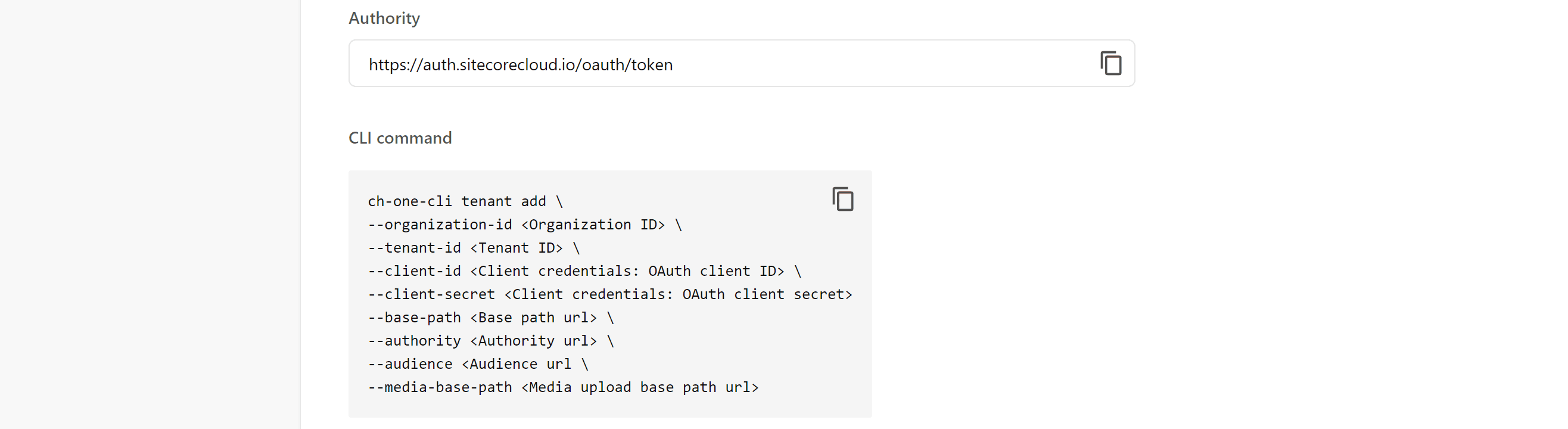

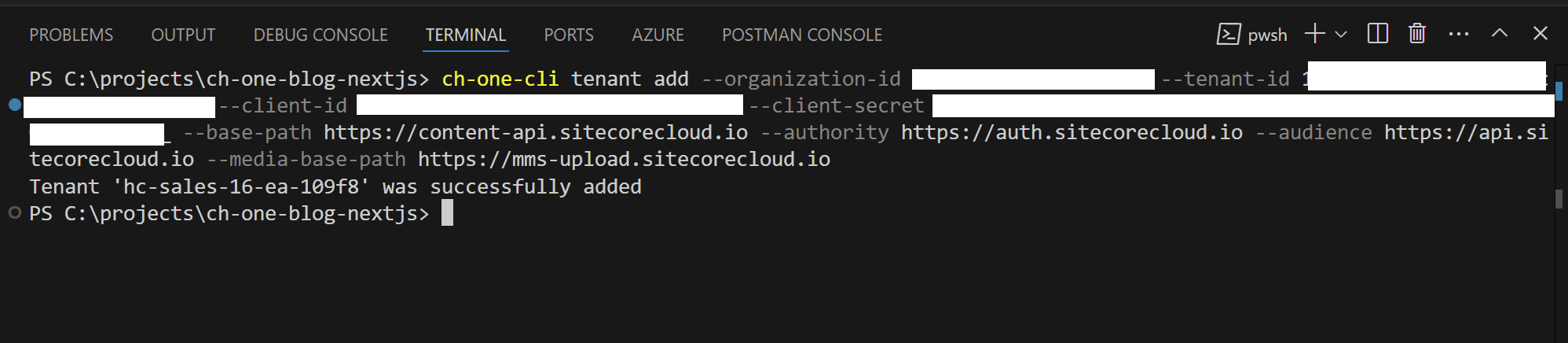

All that remains is to run the login on the command line. The command itself is shown at the bottom of this page, in the part that explains the command line.

Clicking the copy icon there saves the complete command, with all the values filled in, to the clipboard, so you can run it by simply pasting it into a terminal. The output shows whether the tool could connect to the tenant and whether the login succeeded.

More details can be found in the following pages.

Serialize

Next, we will serialize the content types, content, and media that we have created in Content Hub ONE and save the data locally.

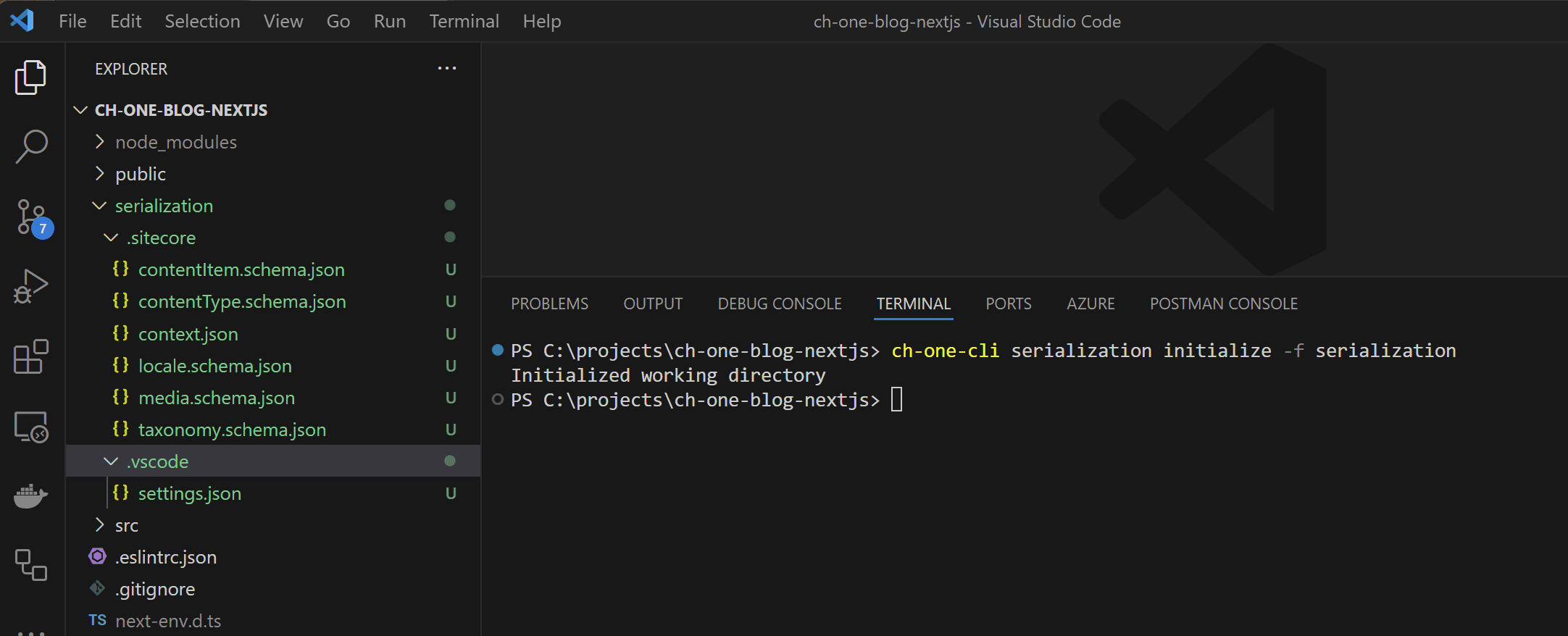

The first step is to create the folder where the serialization will take place. This step can be skipped, in which case the tool works in the folder where you run the command. We want to keep the serialized data together in one folder, so we executed the following in this case.

ch-one-cli serialization initialize -f serialization

This will create a folder as shown below.

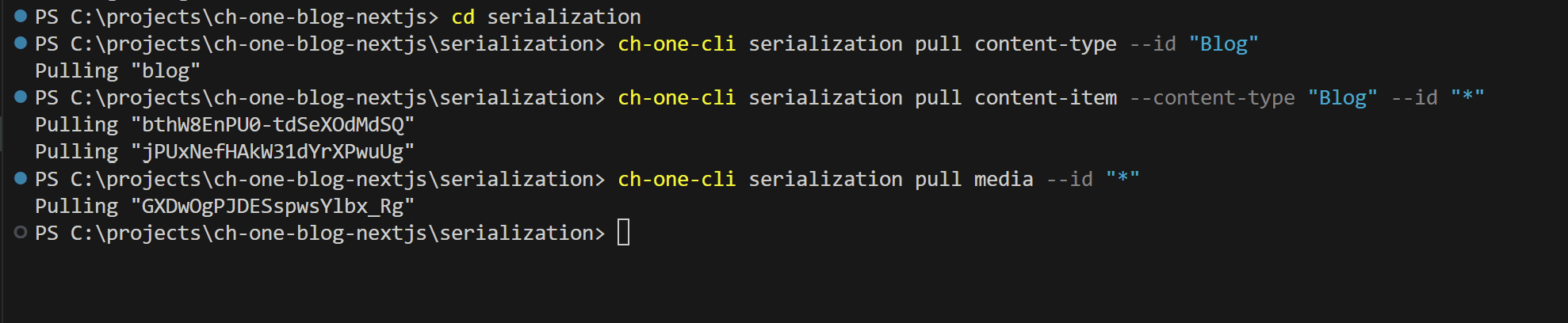

Go to the folder you created and pull to get the Blog content type.

cd serialization

ch-one-cli serialization pull content-type --id "Blog"

Only one content type is retrieved here because we created only one, but you can also use wildcards to get several at once.
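When you do use a wildcard, keep it in quotes. This is a property of the shell rather than of the CLI: an unquoted `*` is expanded into file names before the command runs. The sketch below demonstrates the difference with a throwaway folder; `count_args` and the file names are made up for the demonstration.

```sh
#!/bin/sh
# Show why the wildcard is quoted: an unquoted * is expanded by the shell
# into file names before any command sees it.
demo_dir=$(mktemp -d)
touch "$demo_dir/one.txt" "$demo_dir/two.txt"

# Hypothetical stand-in for a CLI: prints how many arguments it received.
count_args() { echo "$#"; }

unquoted=$(cd "$demo_dir" && count_args --id *)    # * becomes: one.txt two.txt
quoted=$(cd "$demo_dir" && count_args --id "*")    # the literal * is passed through

echo "unquoted: $unquoted arguments"   # unquoted: 3 arguments
echo "quoted: $quoted arguments"       # quoted: 2 arguments

rm -rf "$demo_dir"
```

Inside the serialization folder there are files for the shell to match, so the quotes matter in practice.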

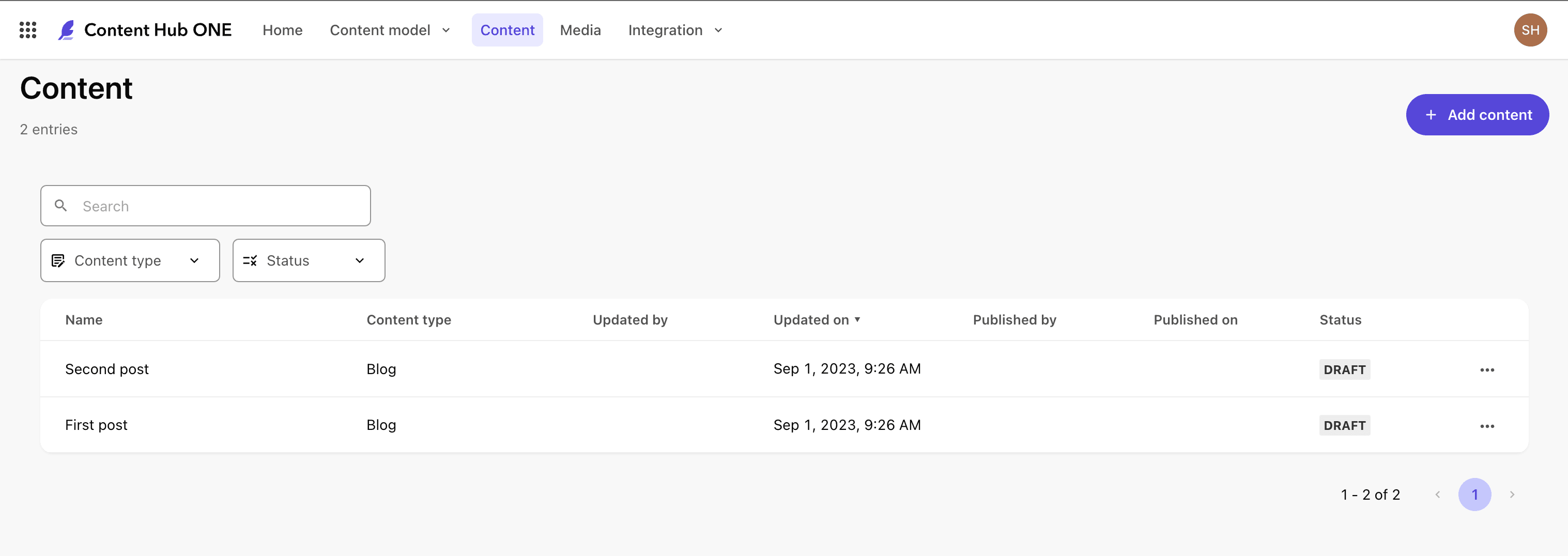

Next, retrieve the content. Since we have created two content items in this case, we specify the content type and retrieve all of them.

ch-one-cli serialization pull content-item --content-type "Blog" --id "*"

Finally, retrieve the media file.

ch-one-cli serialization pull media --id "*"

Everything is now on file.
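The three pull commands can be gathered into one small script. This is only a sketch under a few assumptions: `run`, `pull_all`, and the `DRY_RUN` variable are names I made up, and the script stops at the first command that fails. With `DRY_RUN=1` it prints the commands instead of running them, so it can be tried without a tenant.

```sh
#!/bin/sh
# Run a command, or only print it when DRY_RUN=1.
# `run`, `pull_all`, and DRY_RUN are hypothetical names for this sketch.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Printed form drops the shell quoting; it is for reading, not pasting.
    echo "$*"
  else
    "$@"
  fi
}

# Pull one content type, all of its items, and all media, in that order.
# The && chain stops at the first command that fails.
pull_all() {
  content_type=$1
  run ch-one-cli serialization pull content-type --id "$content_type" &&
  run ch-one-cli serialization pull content-item --content-type "$content_type" --id "*" &&
  run ch-one-cli serialization pull media --id "*"
}

# Preview the commands without contacting the tenant.
DRY_RUN=1
pull_all "Blog"
```

Set `DRY_RUN=0` and run the script from the serialization folder, after logging in, to perform the actual pull.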

Push Serialized data

Since the amount of data we have created is still small, we will delete all the data in Content Hub ONE and restore it with push. To delete it, first unpublish any content that has already been published, then delete the content, media, and content type.

The first step is to push the media back to the now empty Content Hub ONE.

ch-one-cli serialization push media --id "*"

You can see that the files have been restored from the empty state of the media.

In the same way, push the content type and then the content, in that order.

ch-one-cli serialization push content-type --id "Blog"

ch-one-cli serialization push content-item --content-type "Blog" --id "*"

The content was successfully restored. In this case, since everything is uploaded as a Draft, it is necessary to publish it separately.
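To keep the push order used in this article (media, then content type, then content items) in one place, the commands can be generated as a plan and reviewed before running. This is a sketch only: `push_plan` is a hypothetical helper that prints the commands and never calls the CLI itself, and it assumes the content type name contains no characters that are special to the shell.

```sh
#!/bin/sh
# Print the push commands in the order used in this article:
# media first, then the content type, then its content items.
# `push_plan` is a hypothetical helper; it only prints text.
push_plan() {
  content_type=$1
  cat <<EOF
ch-one-cli serialization push media --id "*"
ch-one-cli serialization push content-type --id "$content_type"
ch-one-cli serialization push content-item --content-type "$content_type" --id "*"
EOF
}

# Review the plan first. To execute it and stop at the first failure:
#   push_plan "Blog" | sh -e
push_plan "Blog"
```

Printing the plan first is a deliberate choice here, because a push changes data on the tenant, while a pull only writes local files.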

For more detailed instructions, please refer to the following pages.

Summary

The command line tool makes it easy to export and import the data you have created. Because the data can be managed as code, it can also be managed alongside Next.js and other projects.